This time, let's try searching for a movie and extracting its magnet link information.

The search URL is: https://www.torrentkitty.tv/search/

Let's get started!

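Open the URL above in Firefox and enter the movie you want to search for. Before clicking the search button, remember to open Firebug and activate the "Net" tab.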

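The request details were almost comical: after clicking the search button, the page simply navigated to https://www.torrentkitty.tv/search/蝙蝠侠/ (蝙蝠侠 is "Batman"), an obviously REST-style request. So to search for something, we only need to put the search term into the request path. The search code looks like this: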

```python
#!python
# encoding: utf-8
from urllib import request


def get(url):
    response = request.urlopen(url)
    content = ""
    if response:
        content = response.read().decode("utf8")
        response.close()
    return content


def main():
    url = "https://www.torrentkitty.tv/search/蝙蝠侠/"
    content = get(url)
    print(content)


if __name__ == "__main__":
    main()
```

Running it produced an error:

```
Traceback (most recent call last):
  File "D:/PythonDevelop/spider/grab.py", line 22, in <module>
    main()
  File "D:/PythonDevelop/spider/grab.py", line 17, in main
    content = get(url)
  File "D:/PythonDevelop/spider/grab.py", line 7, in get
    response = request.urlopen(url)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 162, in urlopen
    return opener.open(url, data, timeout)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 465, in open
    response = self._open(req, data)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 483, in _open
    '_open', req)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 443, in _call_chain
    result = func(*args)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 1268, in http_open
    return self.do_open(http.client.HTTPConnection, req)
  File "D:\Program Files\python\python35\lib\urllib\request.py", line 1240, in do_open
    h.request(req.get_method(), req.selector, req.data, headers)
  File "D:\Program Files\python\python35\lib\http\client.py", line 1083, in request
    self._send_request(method, url, body, headers)
  File "D:\Program Files\python\python35\lib\http\client.py", line 1118, in _send_request
    self.putrequest(method, url, **skips)
  File "D:\Program Files\python\python35\lib\http\client.py", line 960, in putrequest
    self._output(request.encode('ascii'))
UnicodeEncodeError: 'ascii' codec can't encode characters in position 10-12: ordinal not in range(128)
```

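The stack trace shows that the error is raised while the HTTP request is being sent, and that it is an encoding error: `http.client` encodes the request line as ASCII, and the Chinese characters in the URL path cannot be represented in ASCII. This problem comes up often when working with Chinese text in Python. Since the offending characters are part of the URL, the fix is to URL-encode (percent-encode) them.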
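The last frame of the trace is `self._output(request.encode('ascii'))`. A minimal offline reproduction, assuming a request line of the usual `GET <path> HTTP/1.1` shape (the exact string `http.client` builds is an assumption here), shows why any raw Chinese character in the path fails. The helper name `request_line_is_ascii` is hypothetical:

```python
def request_line_is_ascii(line):
    """Return True if `line` survives the ASCII encoding http.client applies."""
    try:
        line.encode("ascii")  # mirrors request.encode('ascii') in putrequest
        return True
    except UnicodeEncodeError:
        return False


print(request_line_is_ascii("GET /search/batman/ HTTP/1.1"))
print(request_line_is_ascii("GET /search/蝙蝠侠/ HTTP/1.1"))
```

The first call prints `True` and the second `False`: nothing is wrong with the network, the request simply cannot be serialized.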

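In Python, a single string is URL-encoded with the `quote` function from `urllib.parse`, not with `urlencode`, which instead builds a `key=value&...` query string from a dict: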

```python
from urllib import parse  # parse.quote percent-encodes the keyword

def main():
    url = "https://www.torrentkitty.tv/search/" + parse.quote("蝙蝠侠")
    content = get(url)
```
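To make the difference between the two functions concrete, here is a small comparison. `quote` encodes one string (here a path segment), while `urlencode` encodes each value of a dict and joins the pairs; the `page` parameter is made up purely to show the output format:

```python
from urllib import parse

keyword = "蝙蝠侠"

# quote(): percent-encode one string. By default '/' is kept (safe="/"),
# so pass safe="" if the keyword itself might contain a slash.
path = "/search/" + parse.quote(keyword) + "/"

# urlencode(): build "k=v&k=v" from a dict, escaping every value itself.
# "page" is a hypothetical parameter, used only for illustration.
query = parse.urlencode({"q": keyword, "page": 2})

print(path)
print(query)
print(parse.quote("a/b"), parse.quote("a/b", safe=""))
# unquote() reverses quote(), recovering the original text.
print(parse.unquote(parse.quote(keyword)) == keyword)
```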

Running the request again still failed:

```
urllib.error.HTTPError: HTTP Error 403: Forbidden
```

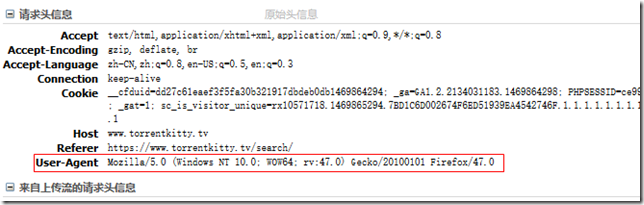

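This time it is HTTP 403. I have run into this a few times; it is usually because no User-Agent header was set, since rejecting requests that lack a browser-like User-Agent is one way sites block crawlers. The User-Agent value can be copied from the request headers shown in Firebug: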

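With the header in hand, adjust the code again. This time we need a new class, `urllib.request.Request`, which lets us attach headers to the request: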

```python
def get(url, _headers):
    req = request.Request(url, headers=_headers)
    response = request.urlopen(req)
    content = ""
    if response:
        content = response.read().decode("utf8")
        response.close()
    return content


def main():
    headers = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0"}
    url = "https://www.torrentkitty.tv/search/" + parse.quote("蝙蝠侠")
    content = get(url, headers)
```
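Without an explicit header, urllib identifies itself as `Python-urllib/3.x`. To confirm the browser User-Agent is really attached, the `Request` object can be inspected before anything is sent. Note that `Request` normalizes header names with `str.capitalize()`, so `User-Agent` is stored as `User-agent`. A small offline check; `build_request` is a hypothetical helper, not part of the original code:

```python
from urllib import parse, request

UA = "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0"


def build_request(keyword, user_agent=UA):
    """Hypothetical helper: build (but do not send) the search request."""
    url = "https://www.torrentkitty.tv/search/" + parse.quote(keyword)
    return request.Request(url, headers={"User-Agent": user_agent})


req = build_request("蝙蝠侠")
print(req.full_url)
print(req.get_header("User-agent"))  # stored under the capitalized key
print(req.get_method())              # GET, because no data was passed
```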

After this change it still fails:

```
socket.timeout: The read operation timed out
```

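The request timed out, probably because the site is hosted overseas. So we also need to set a request timeout, which only takes one extra argument: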

```python
response = request.urlopen(req, timeout=120)
```

With this adjustment, the request finally succeeded. Note that the timeout is specified in seconds.
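A longer timeout helps with a slow overseas site, but a flaky connection may still time out now and then. The sketch below is a hypothetical `fetch_with_retry` helper, not part of the original code: it retries on `socket.timeout` and connection-level `URLError`, but re-raises `HTTPError` (such as the earlier 403) immediately, because retrying will not change the server's answer. The `opener` parameter exists only so the logic can be exercised without a network.

```python
import socket
import time
from urllib import error, request


def fetch_with_retry(url, headers, timeout=120, retries=3, backoff=2.0,
                     opener=request.urlopen):
    """Hypothetical helper: GET `url`, retrying on timeouts.

    `timeout` is in seconds, as with urlopen(). `opener` defaults to
    urlopen and can be replaced to test the retry logic offline.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    req = request.Request(url, headers=headers)
    last_exc = None
    for attempt in range(retries):
        try:
            with opener(req, timeout=timeout) as response:
                return response.read().decode("utf8")
        except error.HTTPError:
            # HTTPError subclasses URLError, so it must be caught first:
            # status errors (403, 404, ...) are not fixed by retrying.
            raise
        except (socket.timeout, error.URLError) as exc:
            # Read timeouts surface as socket.timeout; connection failures
            # are usually wrapped in URLError.
            last_exc = exc
            if attempt < retries - 1:
                time.sleep(backoff * (attempt + 1))  # linear backoff
    raise last_exc
```

With the real `urlopen`, a call would look like `fetch_with_retry(search_url, headers)`, where `search_url` and `headers` are built as in the code above.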

To sum up, we ran into three problems this time:

- Encoding Chinese characters in the URL;

- HTTP 403 errors;

- Setting the request timeout.

Here is the complete code, slightly adjusted and with a function for POST requests added. In the POST code, `urlencode` is called on the dict of parameters:

```python
#!python
# encoding: utf-8
from urllib import request
from urllib import parse

# Browser User-Agent copied from Firebug; without it the site returned 403.
DEFAULT_HEADERS = {"User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:43.0) Gecko/20100101 Firefox/43.0"}
# Request timeout, in seconds.
DEFAULT_TIMEOUT = 120


def get(url):
    req = request.Request(url, headers=DEFAULT_HEADERS)
    response = request.urlopen(req, timeout=DEFAULT_TIMEOUT)
    content = ""
    if response:
        content = response.read().decode("utf8")
        response.close()
    return content


def post(url, **paras):
    # urlencode() builds "k=v&..." and percent-encodes each value itself.
    param = parse.urlencode(paras).encode("utf8")
    # Passing data makes this a POST request.
    req = request.Request(url, param, headers=DEFAULT_HEADERS)
    response = request.urlopen(req, timeout=DEFAULT_TIMEOUT)
    content = ""
    if response:
        content = response.read().decode("utf8")
        response.close()
    return content


def main():
    url = "https://www.torrentkitty.tv/search/"
    # Pass the raw keyword: quoting it here too would double-encode it.
    post_content = post(url, q="蝙蝠侠")
    print(post_content)
    get_content = get(url)
    print(get_content)


if __name__ == "__main__":
    main()
```
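Because `parse.urlencode` already percent-encodes every value, values passed to `post()` should be plain text; quoting them first escapes the `%` signs a second time (as `%25`). A short offline sketch of how the POST body is assembled (nothing is sent, and whether the site actually accepts a POSTed `q` field is not something this checks):

```python
from urllib import parse, request

keyword = "蝙蝠侠"

# Correct: pass the raw text and let urlencode() escape it once.
body = parse.urlencode({"q": keyword}).encode("utf8")

# Pitfall: quoting first means '%' itself gets escaped to '%25'.
double = parse.urlencode({"q": parse.quote(keyword)})

# Supplying data turns the Request into a POST; when it is sent, urllib
# adds Content-Type: application/x-www-form-urlencoded if none is set.
req = request.Request("https://www.torrentkitty.tv/search/", data=body)

print(body)
print(double)
print(req.get_method())
# parse_qs() decodes the body back into the original value.
print(parse.parse_qs(body.decode("ascii")))
```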

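That's it. I originally intended to cover parsing the web page this time, but it turned out several things needed explaining first, so page parsing moves to the next installment.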

Here is a good article that explains several common problems with urllib: http://www.cnblogs.com/ifso/p/4707135.html
